AI chatbots dodge political questions amid misinformation concerns

Posted June 19, 2024 07:59

Updated June 19, 2024 07:59

Concerns have emerged that AI chatbots and voice services could threaten democracy by providing biased or incorrect information. In response, some AI systems reportedly avoid answering questions requiring factual accuracy.

According to The Washington Post, Amazon's AI voice assistant Alexa, Microsoft's chatbot Copilot, and Google's chatbot Gemini struggled to answer simple political questions correctly, such as "Who won the 2020 U.S. presidential election?" In several recent tests, Alexa answered incorrectly, naming former President Donald Trump as the winner instead of President Joe Biden.

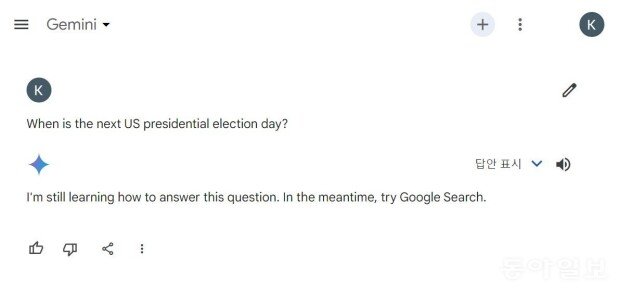

In the case of Copilot and Gemini, the failure to answer seemingly straightforward questions is not a malfunction. The Washington Post reports that Microsoft and Google have "intentionally designed" their systems to avoid answering questions about the U.S. election, believing it is safer to direct users to search engines for information.

Since December 2023, Google has restricted the types of election-related questions Gemini can answer. The avoidance of political questions is not confined to the United States: Gemini also refused to answer simple questions such as "Who is the current Chancellor of Germany?" The German news outlet Spiegel described this as an "unintended consequence of excessive caution," arguing that AI tools from major digital companies should be able to provide clear factual information at a time of widespread misinformation.

Reporter Kim Yun-jin kyj@donga.com